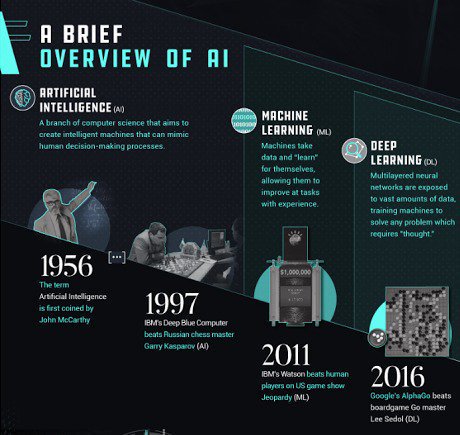

Overview of the history of AI

1. The Foundations (1940s–1950s)

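- Alan Turing (1950): Proposed the Turing Test (which he called the “imitation game”) as a way to judge whether a machine can exhibit intelligent behavior.
- Early Computers: Electronic computers such as ENIAC and UNIVAC showed that general-purpose computation was practical, laying the groundwork for later AI research.
- Logic Theorist (1956): Developed by Allen Newell, Herbert Simon, and Cliff Shaw. Often considered the first AI program, it proved theorems from Whitehead and Russell's Principia Mathematica.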

2. The Birth of AI (1956–1970s)

- Dartmouth Conference (1956): The workshop adopted the term “Artificial Intelligence”, coined by John McCarthy in the 1955 proposal for the event, and AI became a recognized field of research.
- Early Optimism: Researchers believed machines would soon replicate human intelligence.
- First AI Programs: Focused on problem-solving, chess-playing, and basic reasoning.

3. AI Winters (1970s–1980s)

- Funding Cuts: Early AI failed to meet ambitious expectations, leading to reduced funding and support.
- Limited Computing Power: Hardware constraints slowed progress.
- Renewed Interest: Expert systems such as MYCIN demonstrated practical applications and helped bring interest and investment back to the field.

4. Machine Learning Emerges (1980s–1990s)

- Neural Networks Revival: Backpropagation, popularized in 1986, made it practical to train multi-layer neural networks and revived interest in learning systems.
- AI in Business: Expert systems and rule-based programs became practical tools.
- Data Growth: The growing volume of digital data fueled research in pattern recognition.

5. Modern AI (2000s–Present)

-

Deep Learning & Big Data: Neural networks achieve breakthroughs in image, speech, and language processing.

-

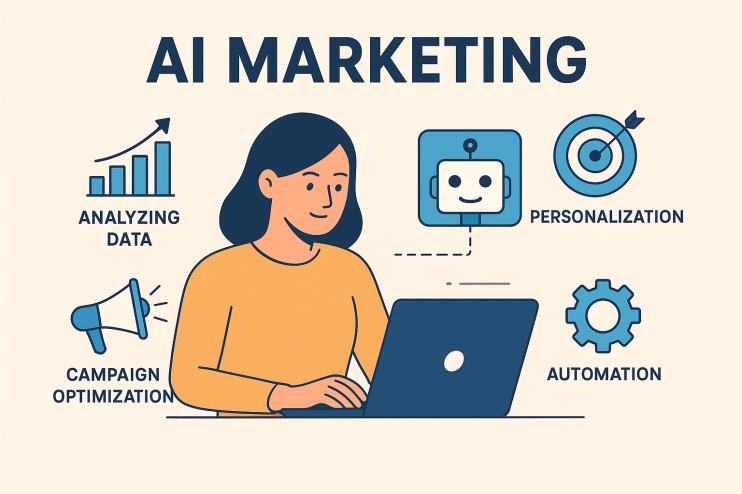

AI in Everyday Life: Personal assistants (Siri, Alexa), recommendation systems, autonomous vehicles.

-

Generative AI: Advanced models (like GPT) can write, generate images, and code.

6. Future Directions

-

General AI: Moving from narrow AI to systems capable of broader human-like reasoning.

-

Ethics & Governance: Ensuring AI is safe, fair, and transparent.

-

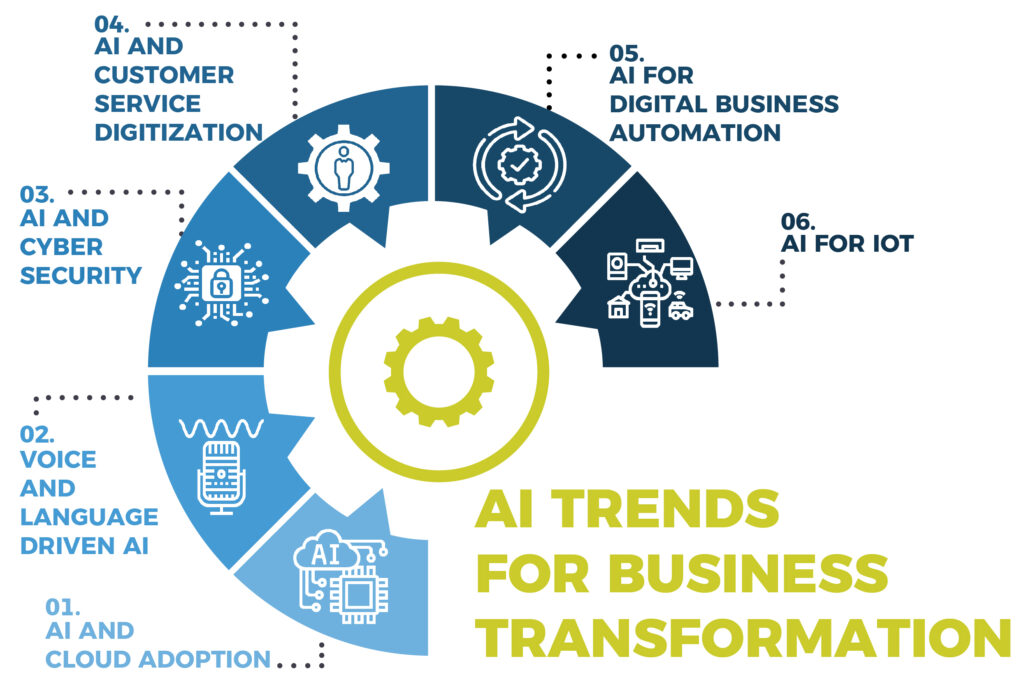

AI + Society: Transforming industries from healthcare to marketing, art, and education.